Performance Testing Your Applications

Asynchronous programming brought about net improvements in the efficiency of web applications. It also spawned new geekish obsessions, such as how to squeeze a few thousand more requests per second out of one framework at the expense of another. While these rivalries are ultimately healthy for the advancement of better solutions, they are often anchored in the realm of pure theory, and do not reflect the de facto performance of your application.

Around the turn of the post-millennium decade, the "enterprise web" technologies, and the veteran testing tools accompanying them, such as Apache Benchmark, felt obsolete and inadequate. Good riddance, I say! The past few years have seen quite a few testing tools emerge in the performance testing space, and they hold excellent promise, particularly because they are built on inherently scalable technologies.

This article goes over a few key concepts to take into consideration when testing your application or services. It also enumerates a few of the tools used for testing, and shows practical usage scenarios for some of them.

Code Before You Test

It is advisable to have most of the functionality in place when we start testing. Before we start employing load testing tools, it's good to have a rough idea of the amount of internal computation time each endpoint takes. That means the end-to-end time that we theoretically control, from the moment the request is received, to the moment the response is packaged and ready to be sent, through the wires or the waves, towards the requester.

Assuming we have a microservice endpoint named "Stuff", and a handler method HandlerForStuff, it is a good idea to store the current time in a timestamp variable on the first line of the handler. Then, just before the response is written to the HTTP output stream, find a way to embed the time difference inside the response. Do not log it to the console. During performance testing, anything logged to the console could slow things down considerably.

In Go, for example, this is how we can quickly calculate the time spent inside a single request handler which returns the content packaged as JSON:

    func HandlerForStuff(w http.ResponseWriter, r *http.Request) {
        startTime := time.Now()

        // do various stuff as per application's demands
        ...

        // set the JSON header with the status code
        WriteJSONStatus(w, httpStatusCode)

        // If the application is in debug mode, inject the time elapsed
        // inside the JSON output
        if config.IsDebugMode() {
            elapsed := time.Since(startTime)
            milisecs := elapsed.Nanoseconds() / 1e6
            jsonifiedFeed = []byte(strings.Replace(string(jsonifiedFeed), "{",
                "{\"DebugTimeElapsedInternally\":\""+strconv.FormatInt(milisecs, 10)+" ms\",\n", 1))
        }

        // write the JSON feed and good bye
        _, err := w.Write(jsonifiedFeed)
        if err != nil {
            log.Error("server.HandlerForStuff route - w.Write", err)
            return
        }
    }

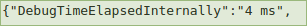

That will print for us the time spent by the app with the inner workings of the specific endpoint. In this case the operation takes approximately four milliseconds:

This is crucial, because it helps us differentiate between the internal computation time and the overall response trip time. The difference between the two is the latency (the time the request and response spend on the network infrastructure).
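To make that split tangible, here is a minimal, self-contained sketch that measures both numbers against a local test server. It is an illustration under assumptions, not the handler from above: the `stuffResponse` type, the `debugElapsedMs` field name and the 4ms sleep standing in for real work are all hypothetical, and the elapsed time is embedded through a struct field rather than string replacement.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
	"time"
)

// stuffResponse is a hypothetical payload; DebugElapsedMs is only set in debug mode.
type stuffResponse struct {
	Message        string `json:"message"`
	DebugElapsedMs *int64 `json:"debugElapsedMs,omitempty"`
}

// handlerForStuff simulates some work and, in debug mode, embeds the time
// spent inside the handler into the JSON response.
func handlerForStuff(debug bool) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		start := time.Now()
		time.Sleep(4 * time.Millisecond) // stand-in for real application work

		resp := stuffResponse{Message: "ok"}
		if debug {
			ms := time.Since(start).Milliseconds()
			resp.DebugElapsedMs = &ms
		}
		w.Header().Set("Content-Type", "application/json")
		if err := json.NewEncoder(w).Encode(resp); err != nil {
			log.Printf("handlerForStuff: encode: %v", err)
		}
	}
}

// measure returns the time reported by the handler and the full round trip
// as seen by the client.
func measure(url string) (internal, roundTrip time.Duration, err error) {
	start := time.Now()
	res, err := http.Get(url)
	if err != nil {
		return 0, 0, err
	}
	defer res.Body.Close()

	var body stuffResponse
	if err := json.NewDecoder(res.Body).Decode(&body); err != nil {
		return 0, 0, err
	}
	roundTrip = time.Since(start)
	if body.DebugElapsedMs != nil {
		internal = time.Duration(*body.DebugElapsedMs) * time.Millisecond
	}
	return internal, roundTrip, nil
}

// runDemo starts a throwaway local server and measures one request against it.
func runDemo() (internal, roundTrip time.Duration, err error) {
	srv := httptest.NewServer(handlerForStuff(true))
	defer srv.Close()
	return measure(srv.URL)
}

func main() {
	internal, roundTrip, err := runDemo()
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Printf("internal: %v, round trip: %v, network and overhead: %v\n",
		internal, roundTrip, roundTrip-internal)
}
```

Against a local test server the last figure is tiny. Point `measure` at a remote deployment and that is where the latency shows up.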

If you are able to deploy your application on a faraway server, do so as soon as you have a beta version, with the time-elapsed code in place. On the internal network, there would be just 1 or 2 milliseconds of latency on top of the 4ms the service takes to prepare the response. This often creates a false sense of comfort.

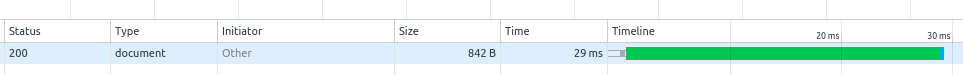

Let's assume we hit that microservice endpoint residing on a server in Atlanta, Georgia (in the U.S.) from Toronto, Ontario (Canada):

As you can see, the latency component can be disproportionately high, and the geographical distance is one of the fixed costs. For more (extremely detailed) information on this, I highly recommend Ilya Grigorik's book, High Performance Browser Networking. I believe it is also available for browsing online.

So, have we "wasted" a good 25 milliseconds on the trip from the server to a computer that is, in this case, a few hundred miles away? Not necessarily. This is a good place to point out the differences between the blocking I/O servers and the more recent async frameworks, which bring significant improvements:

A traditional PHP, Java or .NET application would have latched onto a thread for the whole 29ms-30ms duration, in a completely blocking fashion. The client connection gets accepted, the request is read, parsed and processed, and the response is generated and written to the connection, which is eventually closed. Then the next connection gets handled, and so forth.

To mitigate this, web servers such as Apache spawn multiple threads, which take turns executing on the actual CPU cores. Even if we consider an optimistic average of 30ms per request, we only have 1000ms in a second. This gives us 1000 / 30 ≈ 33 requests per second per CPU core, in an ideal (unrealistic) scenario where we do not account for context switching costs. Eventually, under heavy load, too many spawned threads run the risk of grinding the entire server to a halt.
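The back-of-the-envelope ceiling above is easy to recompute for your own numbers. A tiny sketch, assuming a fully blocking model with one request at a time per core and no context switching (the function name is mine):

```go
package main

import "fmt"

// maxBlockingRPS returns the theoretical ceiling of requests per second for
// one core when every request occupies it for perRequestMs milliseconds.
func maxBlockingRPS(perRequestMs float64) float64 {
	return 1000 / perRequestMs
}

func main() {
	// prints "33.3 requests/second per core"
	fmt.Printf("%.1f requests/second per core\n", maxBlockingRPS(30))
}
```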

The modern approaches, whether event loops in Node.js or Vert.x, greenlets and coroutines in Python, or goroutines in Go, have the current thread cede control of execution to another chunk of code whenever the running one blocks while waiting for I/O operations to finish. This makes the application server much more efficient under heavy load, and much less prone to buckle and break down.

Even though one request-response roundtrip takes about the same time as the completely blocking alternative, calculating the maximum requests per second rate is not a straightforward proposition. The nature of the multiplexed connections is such that we can have a full stack of those finishing almost at the same time, depending on the nature of the application. Perhaps the first response returns after 30ms, but the subsequent 199 responses all return in the next 15ms, courtesy of the non-blocking manner in which they negotiate the execution context. So for these situations, only proper load testing under varied scenarios can help set the expectations.
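A toy comparison shows why the per-request time stops being a useful predictor. This is only a sketch: `fakeIO` is a made-up stand-in that sleeps instead of doing real network or disk I/O, so it flatters the concurrent case, but the shape of the result is the point.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// fakeIO stands in for a request that spends its time waiting on I/O.
func fakeIO(wait time.Duration) {
	time.Sleep(wait)
}

// runSequential handles n requests one after another, the way a single
// blocking thread would.
func runSequential(n int, wait time.Duration) time.Duration {
	start := time.Now()
	for i := 0; i < n; i++ {
		fakeIO(wait)
	}
	return time.Since(start)
}

// runConcurrent handles n requests in goroutines, which give up the
// processor while they wait.
func runConcurrent(n int, wait time.Duration) time.Duration {
	start := time.Now()
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			fakeIO(wait)
		}()
	}
	wg.Wait()
	return time.Since(start)
}

func main() {
	wait := 30 * time.Millisecond
	// 200 concurrent requests finish in roughly the time of one.
	fmt.Println("200 concurrent:", runConcurrent(200, wait))
	// Sequentially, 200 requests would need at least 6 seconds; 20 are enough
	// to see the trend.
	fmt.Println("20 sequential: ", runSequential(20, wait))
}
```

Real handlers also burn CPU between waits, so the gap is narrower in practice, which is exactly why measuring beats estimating.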

Load Testing Tools

There are fortunately quite a few testing tools to choose from nowadays, each covering various facets of load testing. Many of them can be extremely useful, helping you discover unexpected weaknesses in your application, and expose performance bottlenecks that you can improve, while still in development.

It is not a good idea to run the test tools and the targeted server application on the same machine. Both the tool and the server will compete for the suddenly scarce computing power and the results will be distorted, unreliable, and usually disappointing.

Initially, try to run the tests using an internal network. Those tests will not always expose accurate latency metrics, but are very helpful for detecting a class of problems particular to the intensive development cycle, such as crashes due to race conditions, and memory leak buildups. Then, for a real-life experience, involving network transport latencies, the test machine should be geographically far from the server.

Vegeta

Vegeta is a tool that simulates a certain number of requests hitting your service at a relatively constant rate. It is very useful for identifying a reasonable worst-case ceiling, above which your service cannot perform well on a given server with certain resources. Because it is a lightweight, resource-frugal tool written in Go, you can simulate in excess of 1000 requests per second from a single testing machine.

The tool can save the results as a report, and incorporates a chart plotting component that can render those results to a self-contained HTML page.

You can create a shell script, say startVegetaTest.sh, and run it to have both the test and the results HTML generated in one go:

    #!/bin/sh

    # attack with 500 requests per second for 10 seconds, and generate the report file afterwards
    # replace the my.service.server/service location with your own
    echo "GET http://my.service.server/service" | vegeta attack -rate=500 -duration=10s |\
      tee results.bin | vegeta report

    # transform the results into an HTML page
    cat results.bin | vegeta report -reporter=plot > plot.html
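If you are curious what "a relatively constant rate" means mechanically, the idea can be sketched with nothing but the Go standard library. To be clear, this is not Vegeta's code or API: the `attack` and `newLocalTarget` names are hypothetical, and the sketch only counts successes, where the real tool records latencies and much more.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"sync"
	"time"
)

// attack fires GET requests at url at a fixed rate (requests per second,
// must be positive) for the given duration. It returns how many requests
// were sent and how many came back with HTTP 200.
func attack(url string, rate int, duration time.Duration) (sent, ok int) {
	ticker := time.NewTicker(time.Second / time.Duration(rate))
	defer ticker.Stop()
	deadline := time.After(duration)

	var wg sync.WaitGroup
	var mu sync.Mutex
	for {
		select {
		case <-deadline:
			wg.Wait() // let in-flight requests finish before reporting
			return sent, ok
		case <-ticker.C:
			sent++
			wg.Add(1)
			// Each request runs in its own goroutine, so a slow response
			// does not delay the next tick.
			go func() {
				defer wg.Done()
				res, err := http.Get(url)
				if err != nil {
					return
				}
				io.Copy(io.Discard, res.Body)
				res.Body.Close()
				if res.StatusCode == http.StatusOK {
					mu.Lock()
					ok++
					mu.Unlock()
				}
			}()
		}
	}
}

// newLocalTarget starts a throwaway server to aim at (for the demo only).
func newLocalTarget() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "ok")
	}))
}

func main() {
	srv := newLocalTarget()
	defer srv.Close()

	sent, ok := attack(srv.URL, 50, time.Second)
	fmt.Printf("sent %d requests, %d succeeded\n", sent, ok)
}
```

The key property is that the sending rate does not depend on how fast the server answers, which is what lets this style of test find the point where responses start piling up.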

Wrk

Wrk is another great tool that takes a slightly different approach. Rather than attempting to maintain a constant requests-per-second throughput, it keeps a specified number of connections open, and uses the number of threads indicated by the user. Needless to say, the more cores the wrk machine (client) has available, the more accurately it is able to simulate a large number of concurrent connections.

In the example below, on a four-core machine, I am using 3 threads (number of cores minus one), theoretically leaving one core to the operating system:

./wrk -t3 -c300 -d10s http://192.168.1.75:8081/service/myservice
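The contrast with the constant-rate model is easier to see in code. Below is a rough sketch of the fixed-connections idea, under loud assumptions: it is not how wrk is implemented (wrk multiplexes many connections per thread with an event loop, while this uses one goroutine and one transport per simulated connection), and the `hammer` and `newLocalTarget` names are mine.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"sync"
	"sync/atomic"
	"time"
)

// hammer keeps `connections` workers busy, each sending requests back to
// back until the duration elapses, and returns the completed request count.
func hammer(url string, connections int, duration time.Duration) int64 {
	var completed int64
	deadline := time.Now().Add(duration)

	var wg sync.WaitGroup
	for i := 0; i < connections; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			// A separate transport per worker, so each worker keeps its
			// own connection instead of sharing a pool.
			tr := &http.Transport{}
			defer tr.CloseIdleConnections()
			client := &http.Client{Transport: tr}

			for time.Now().Before(deadline) {
				res, err := client.Get(url)
				if err != nil {
					continue
				}
				io.Copy(io.Discard, res.Body) // drain so the connection is reused
				res.Body.Close()
				atomic.AddInt64(&completed, 1)
			}
		}()
	}
	wg.Wait()
	return completed
}

// newLocalTarget starts a throwaway server to aim at (for the demo only).
func newLocalTarget() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "ok")
	}))
}

func main() {
	srv := newLocalTarget()
	defer srv.Close()

	n := hammer(srv.URL, 8, time.Second)
	fmt.Printf("%d requests completed over 8 connections in 1s\n", n)
}
```

Here the throughput is an outcome rather than an input: a slower server simply yields fewer completed requests for the same number of connections.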

There are a few other command-line tools which I tried and found useful; most are written in C, Python or Go:

- Boom (Python)

- Boom (Go)

- Weighttp

All these tools are very well suited to granular performance testing: we are targeting specific endpoints and want to gather their optimal throughputs and breaking point thresholds.

A couple of important points worth remembering:

- Allot a dedicated machine for the "bombardment", or at least do not use your CPU intensively if you are running the test from your development box.

- Some of the testing tools naturally use all the available CPU cores. In particular, the Go-based tools can easily be directed to use a certain number of cores. That is not the case for some C and Python-based tools. For those you need to use taskset and run your testing processes in parallel, each on a dedicated core.
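For the Go side of that last point, the mechanism underneath is the runtime's GOMAXPROCS setting, which can be changed from code or through the GOMAXPROCS environment variable without recompiling. A minimal sketch follows; the `limitCores` helper is hypothetical, and whether a given tool exposes its own flag for this is something to check in that tool's documentation.

```go
package main

import (
	"fmt"
	"runtime"
)

// limitCores caps how many OS threads may execute Go code at the same time
// and returns the previous setting. Passing 0 only queries the current value.
func limitCores(n int) int {
	return runtime.GOMAXPROCS(n)
}

func main() {
	total := runtime.NumCPU()

	// Leave one core to the operating system, as in the wrk example.
	use := total - 1
	if use < 1 {
		use = 1
	}

	prev := limitCores(use)
	fmt.Printf("cores: %d, previous limit: %d, current limit: %d\n",
		total, prev, limitCores(0))
}
```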